Learning from Failed Experiments: The Path to Production AI Success

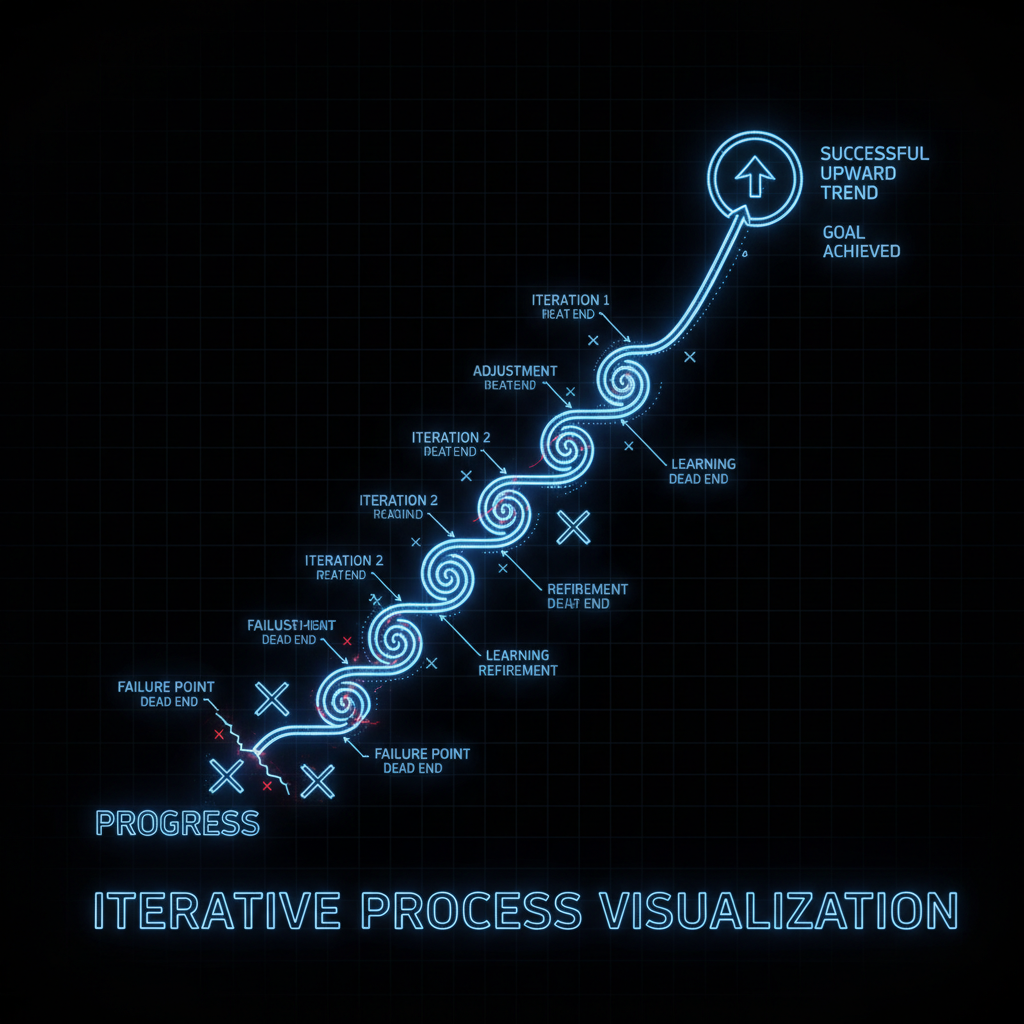

Our failures teach us more than our successes. The teams that excel aren’t those that avoid failure; they’re the ones that fail fast, learn systematically, and iterate relentlessly.

Reframing Failure in AI Development

In traditional software, bugs are failures. In AI development, most experiments fail, and that’s not just acceptable, it’s essential. The key is to distinguish three kinds of failure:

- Productive failures: Experiments that conclusively prove an approach won’t work

- Wasteful failures: Repeated mistakes from not capturing lessons learned

- System failures: Production issues that impact users

Each requires different responses and offers different learning opportunities.

The Experiment-Failure-Learning Cycle

Case Study: Recommendation System Evolution

Our recommendation system’s journey illustrates the power of systematic failure:

Version 1.0: Collaborative Filtering (Failed)

- Hypothesis: User similarity drives preferences

- Result: 45% click-through rate (CTR)

- Failure: Cold start problem killed new user experience

- Learning: Need content-based features for new users

Version 2.0: Deep Learning Everything (Failed)

- Hypothesis: Neural networks will find optimal patterns

- Result: 52% CTR but 300ms latency

- Failure: Too slow for production requirements

- Learning: Performance constraints matter more than accuracy

Version 3.0: Hybrid Approach (Succeeded)

- Hypothesis: Combine simple models with selective deep learning

- Result: 68% CTR with 50ms latency

- Success: Met both accuracy and performance requirements

- Learning: Pragmatic solutions beat pure approaches

Version 4.0: Real-time Personalization (In Progress)

- Building on lessons from V1-3

- Early results: 71% CTR in A/B tests

Each “failure” provided critical insights that informed the next iteration.
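The accuracy-versus-latency trade-off in versions 2.0 and 3.0 can be written down as an explicit release gate. The sketch below is illustrative only: the `Candidate` type, the `passes_gate` function, and both thresholds (a 50% minimum CTR and a 100 ms latency budget) are assumptions, since the actual production requirements are not stated here.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    ctr: float         # click-through rate as a fraction, e.g. 0.52
    latency_ms: float  # serving latency in milliseconds


def passes_gate(c: Candidate, min_ctr: float = 0.50, max_latency_ms: float = 100.0) -> bool:
    """Return True only if the candidate meets BOTH requirements.

    The default thresholds are hypothetical placeholders.
    """
    return c.ctr >= min_ctr and c.latency_ms <= max_latency_ms


# CTR and latency figures are the ones reported in the case study.
v2 = Candidate("v2-deep-learning", ctr=0.52, latency_ms=300)
v3 = Candidate("v3-hybrid", ctr=0.68, latency_ms=50)

for candidate in (v2, v3):
    print(candidate.name, "ships" if passes_gate(candidate) else "blocked")
```

Making the gate explicit before an experiment starts turns "too slow for production" from a late surprise into a check that can run on every candidate.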

Creating a Culture of Productive Failure

1. Experiment Documentation

We maintain an experiment registry that captures:

```python
import json
from datetime import datetime


class ExperimentRecord:
    def __init__(self):
        self.hypothesis = ""   # What we believed
        self.approach = ""     # How we tested it
        self.metrics = {}      # Quantitative results
        self.duration = 0      # Time invested, in days
        self.outcome = ""      # Success/Failure/Partial
        self.lessons = []      # Key takeaways
        self.next_steps = []   # What to try next

    def to_markdown(self):
        # Note: the date is the time the report is rendered,
        # not the time the experiment ran.
        lessons = "\n".join(f"- {lesson}" for lesson in self.lessons)
        next_steps = "\n".join(f"- {step}" for step in self.next_steps)
        return f"""
## Experiment: {self.hypothesis}

**Date**: {datetime.now()}
**Duration**: {self.duration} days
**Outcome**: {self.outcome}

### Approach
{self.approach}

### Results
{json.dumps(self.metrics, indent=2)}

### Lessons Learned
{lessons}

### Next Steps
{next_steps}
"""
```

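One way to make such records searchable, so that a new proposal can be checked against past lessons before work starts, is a small append-only registry. This is a minimal sketch, not the registry described in this section: the `ExperimentRegistry` class, its JSON Lines file format, and the `find_lessons` method are all hypothetical.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


class ExperimentRegistry:
    """Hypothetical append-only registry backed by a JSON Lines file."""

    def __init__(self, path: Path):
        self.path = Path(path)

    def add(self, record: Dict) -> None:
        # One JSON object per line keeps appends cheap and diffs readable.
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")

    def records(self) -> List[Dict]:
        if not self.path.exists():
            return []
        with self.path.open(encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]

    def find_lessons(self, keyword: str) -> List[str]:
        """Return lessons from past experiments that mention the keyword."""
        keyword = keyword.lower()
        return [
            lesson
            for record in self.records()
            for lesson in record.get("lessons", [])
            if keyword in lesson.lower()
        ]


registry = ExperimentRegistry(Path(tempfile.mkdtemp()) / "experiments.jsonl")
registry.add({
    "hypothesis": "User similarity drives preferences",
    "outcome": "Failure",
    "lessons": ["Need content-based features for new users"],
})
print(registry.find_lessons("new users"))
```

Searching lessons before starting a new experiment is one cheap guard against the wasteful failures described earlier: repeated mistakes caused by lessons that were never captured or never consulted.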
2. Failure Post-Mortems (Without Blame)

When experiments fail, we conduct structured reviews:

```markdown
## Post-Mortem: Feature Engineering Pipeline Failure

### What Happened
- New feature extraction code caused training to fail
- 3 days of GPU time wasted
- Delayed model update by 1 week

### Root Cause
- Inadequate validation of feature distributions
- No alerting on training anomalies
- Insufficient test coverage for edge cases

### What Went Well
- Team identified issue within 4 hours
- Rollback procedure worked perfectly
- No production impact

### Action Items
1. Add distribution validation to feature pipeline
2. Implement training anomaly detection
3. Increase test coverage to 90%
4. Create feature engineering checklist

### Lessons for Team
- Always validate data assumptions
- Monitor everything, especially during experiments
- Fast failure detection is more valuable than prevention
```
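The first action item, distribution validation, could take many forms. The sketch below shows one simple option under stated assumptions: it compares a new batch of a feature against a reference sample and flags the batch when its mean drifts by more than a chosen number of reference standard deviations. The function name, the threshold of 3, and the sample values are hypothetical.

```python
import statistics
from typing import Sequence


def mean_shift_exceeded(reference: Sequence[float],
                        batch: Sequence[float],
                        max_shift_in_std: float = 3.0) -> bool:
    """Flag a batch whose mean is far from the reference mean.

    The shift is measured in units of the reference standard deviation.
    """
    ref_mean = statistics.mean(reference)
    ref_std = statistics.stdev(reference)
    if ref_std == 0:
        # A constant reference feature: any change at all counts as drift.
        return statistics.mean(batch) != ref_mean
    shift = abs(statistics.mean(batch) - ref_mean) / ref_std
    return shift > max_shift_in_std


reference = [9.8, 10.1, 10.0, 9.9, 10.2]   # made-up feature values
healthy_batch = [10.0, 9.9, 10.1]
broken_batch = [1000.0, 990.0, 1010.0]     # e.g. a unit change upstream

print(mean_shift_exceeded(reference, healthy_batch))
print(mean_shift_exceeded(reference, broken_batch))
```

A check like this runs in milliseconds before training starts, which is far cheaper than discovering the problem after days of GPU time.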

3. Celebrating Intelligent Failures

We actively celebrate failures that prevent larger issues:

- “Fast Fail Award”: Monthly recognition for quickly identifying doomed approaches

- “Learning Champion”: Recognition for the team member who extracts the most insights from failures

- “Pivot Master”: Recognition for successfully changing direction based on failure signals

Technical Infrastructure for Failure Management

Experiment Tracking System

```python
import traceback
from typing import Any, Callable, Dict

import mlflow


class ExperimentManager:
    def __init__(self, project_name: str):
        self.project = project_name
        mlflow.set_experiment(project_name)

    def run_experiment(self,
                       name: str,
                       hypothesis: str,
                       config: Dict[str, Any],
                       train_func: Callable[[Dict[str, Any]], Dict[str, float]]):
        """
        Safely run experiments with automatic failure handling
        """
        with mlflow.start_run(run_name=name):
            mlflow.log_param("hypothesis", hypothesis)
            mlflow.log_params(config)

            try:
                # Run the experiment; train_func returns a dict of metrics
                metrics = train_func(config)
                mlflow.log_metrics(metrics)
                mlflow.log_param("outcome", "success")
                return {"status": "success", "metrics": metrics}

            except Exception as e:
                # Log failure details
                mlflow.log_param("outcome", "failed")
                mlflow.log_param("error_type", type(e).__name__)
                mlflow.log_text(traceback.format_exc(), "error_trace.txt")

                # Extract learnings from failure
                learnings = self.analyze_failure(e, config)
                mlflow.log_dict(learnings, "failure_analysis.json")

                return {"status": "failed", "error": str(e), "learnings": learnings}

    def analyze_failure(self, error: Exception, config: Dict) -> Dict:
        """Extract learnings from failures"""
        # diagnose_error, check_config, and suggest_fixes are not shown here;
        # they are assumed to be implemented elsewhere on this class.
        analysis = {
            "error_type": type(error).__name__,
            "likely_cause": self.diagnose_error(error),
            "config_issues": self.check_config(config),
            "recommendations": self.suggest_fixes(error, config)
        }
        return analysis
```
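`analyze_failure` relies on helpers such as `diagnose_error` that are not shown. As an illustration only, one simple way to write such a helper is a lookup from exception type to a likely cause; the mapping below and its wording are assumptions, not the implementation used by the team.

```python
from typing import Dict, Type

# Hypothetical mapping from exception type to a likely cause.
LIKELY_CAUSES: Dict[Type[BaseException], str] = {
    MemoryError: "Batch size or model is too large for available memory",
    KeyError: "Config or dataset is missing an expected field",
    FileNotFoundError: "Dataset or checkpoint path does not exist",
    ValueError: "Input shape, dtype, or hyperparameter value is invalid",
}


def diagnose_error(error: BaseException) -> str:
    """Return a coarse, human-readable guess at why a run failed."""
    # Walk the exception's class hierarchy so subclasses match their parents.
    for cls in type(error).__mro__:
        if cls in LIKELY_CAUSES:
            return LIKELY_CAUSES[cls]
    return "Unknown; inspect error_trace.txt"


try:
    {}["learning_rate"]
except KeyError as exc:
    print(diagnose_error(exc))
```

A table like this is deliberately crude. Its value is that every new kind of failure adds one row, so the diagnosis improves as the experiment history grows.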

Automated Failure Detection

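```python
import logging
from typing import Dict

import numpy as np

logger = logging.getLogger(__name__)


class FailureDetector:
    def __init__(self, thresholds: Dict[str, float]):
        self.thresholds = thresholds
        self.alert_channel = "#ml-experiments"

    def alert(self, message: str):
        # Placeholder: a real implementation would post to self.alert_channel
        logger.warning("[%s] %s", self.alert_channel, message)

    def monitor_training(self, metrics_stream):
        """Detect failures early in training"""
        for step, metrics in enumerate(metrics_stream):
            # Check for NaN/Inf
            if np.isnan(metrics['loss']) or np.isinf(metrics['loss']):
                self.alert(f"Training diverged at step {step}")
                return "divergence"

            # Check for stuck training: loss still above its starting level
            if step > 100 and metrics['loss'] > self.thresholds['initial_loss']:
                self.alert(f"No learning detected after {step} steps")
                return "no_learning"

            # Check for overfitting; fall back to 'loss' when the stream
            # does not report a separate 'train_loss'
            train_loss = metrics.get('train_loss', metrics['loss'])
            if metrics.get('val_loss', 0) > 2 * train_loss:
                self.alert(f"Severe overfitting detected at step {step}")
                return "overfitting"

        return "completed"
```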
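A fixed `initial_loss` threshold will not catch training that improves briefly and then stalls. One possible complement, sketched below under stated assumptions, is a plateau check that compares the best loss in the most recent window against the best loss before it. The function name, the window size, and `min_delta` are hypothetical.

```python
from typing import Sequence


def has_plateaued(losses: Sequence[float], window: int = 50, min_delta: float = 1e-3) -> bool:
    """Return True if the last `window` steps did not improve the best loss.

    Improvement means beating the earlier best loss by at least `min_delta`.
    """
    if len(losses) < 2 * window:
        return False  # not enough history to judge
    best_before = min(losses[:-window])
    best_recent = min(losses[-window:])
    return best_before - best_recent < min_delta


# Synthetic loss curves for illustration only.
improving = [1.0 / (step + 1) for step in range(200)]
stalled = [1.0 / (step + 1) for step in range(100)] + [0.01] * 100

print(has_plateaued(improving))
print(has_plateaued(stalled))
```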

Learning Patterns from Failures

Common Failure Modes in AI Systems

Analyzing hundreds of failed experiments reveals recurring patterns:

1. Data Quality Issues (40% of failures)

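```python
# Preventive measures
import numpy as np
from scipy import stats


class DataQualityError(ValueError):
    """Raised when a dataset fails one or more quality checks."""


def validate_dataset(df):
    numeric = df.select_dtypes(include=[np.number]).to_numpy(dtype=float)

    checks = {
        'nulls': df.isnull().sum().sum() == 0,
        'duplicates': df.duplicated().sum() == 0,
        # normaltest returns one p-value per column; every column must pass.
        # This assumes numeric features are expected to be roughly normal.
        'distribution': bool(np.all(stats.normaltest(numeric, axis=0).pvalue > 0.05)),
        # No value more than 3 standard deviations from its column mean
        'outliers': bool(np.all(np.abs(stats.zscore(numeric, axis=0)) < 3))
    }

    failures = [check for check, passed in checks.items() if not passed]
    if failures:
        raise DataQualityError(f"Failed checks: {failures}")
```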

2. Overfitting (25% of failures)

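```python
from tensorflow.keras.callbacks import EarlyStopping

# Early stopping with patience
early_stopping = EarlyStopping(
    monitor='val_loss',
    patience=10,
    restore_best_weights=True,
    verbose=1
)

# Regularization strategies
# build_model is a project-specific helper, not a Keras function
model = build_model(
    dropout_rate=0.3,
    l2_penalty=0.01,
    batch_norm=True
)
```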
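To make the `patience` parameter concrete, the following framework-free sketch shows the logic that patience-based early stopping typically follows: stop once the monitored value has gone `patience` consecutive epochs without improving on its best. It is a simplified illustration with hypothetical names, not the Keras implementation.

```python
from typing import Optional, Sequence


def early_stopping_epoch(val_losses: Sequence[float], patience: int) -> Optional[int]:
    """Return the epoch index at which training would stop, or None.

    Training stops after `patience` consecutive epochs without a new best
    validation loss.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None


# Made-up validation losses: the best value is at epoch 2.
history = [0.90, 0.70, 0.60, 0.65, 0.66, 0.64, 0.70]
print(early_stopping_epoch(history, patience=3))  # stops at epoch 5
```

With `patience=3`, epochs 3, 4, and 5 all fail to beat the 0.60 reached at epoch 2, so training stops at epoch 5. Restoring the best weights, as the configuration above requests, would then roll the model back to epoch 2.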

3. Infrastructure Issues (20% of failures)

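```python
# Resource monitoring
import logging
import time
from contextlib import contextmanager

import psutil

logger = logging.getLogger(__name__)


@contextmanager
def resource_monitor(experiment_name):
    process = psutil.Process()
    start_memory = process.memory_info().rss / 1024 / 1024  # MB
    start_time = time.time()

    try:
        yield
    finally:
        # Compares memory at exit with memory at entry; peaks in between are not captured
        end_memory = process.memory_info().rss / 1024 / 1024  # MB
        duration = time.time() - start_time
        memory_increase = end_memory - start_memory

        if memory_increase > 10000:  # roughly a 10GB increase
            logger.warning(f"{experiment_name} used {memory_increase:.0f}MB")

        if duration > 3600:  # 1 hour
            logger.warning(f"{experiment_name} took {duration/3600:.1f} hours")
```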

Turning Failures into Features

Sometimes, understanding why something fails leads to breakthrough insights:

Example: The “Failed” Anomaly Detector

We built an anomaly detector that had a 15% false positive rate - too high for production. Instead of discarding it, we analyzed the false positives and discovered they predicted user churn with 84% accuracy. The “failed” anomaly detector became our successful churn prediction model.

```python
def analyze_false_positives(predictions, ground_truth, user_data):
    """
    Analyze what false positives might actually indicate
    """
    false_positives = (predictions == 1) & (ground_truth == 0)
    fp_users = user_data[false_positives]

    # Check if FPs correlate with other behaviors
    correlations = {
        'churned_within_30_days': fp_users['churned_30d'].mean(),
        'decreased_activity': fp_users['activity_decrease'].mean(),
        'support_tickets': fp_users['support_contacts'].mean()
    }

    # False positives might be true positives for different problem
    return correlations
```

Building Resilient Systems

Graceful Degradation

Design systems that fail gracefully:

```python
import logging

logger = logging.getLogger(__name__)

# load_model and SimpleHeuristic are assumed to be provided elsewhere.


class ModelServer:
    def __init__(self):
        self.primary_model = load_model('production_v2')
        self.fallback_model = load_model('production_v1')
        self.simple_baseline = SimpleHeuristic()

    def predict(self, request):
        try:
            # Try primary model
            return self.primary_model.predict(request)
        except Exception as e:
            logger.error(f"Primary model failed: {e}")

            try:
                # Fall back to previous version
                return self.fallback_model.predict(request)
            except Exception as e2:
                logger.error(f"Fallback model failed: {e2}")

                # Use simple heuristic as last resort
                return self.simple_baseline.predict(request)
```
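The nested `try` blocks generalize to an ordered chain of predictors. The sketch below is a self-contained illustration with stand-in models; `predict_with_fallback` and the stub functions are hypothetical names, and a real server would also log each failure.

```python
from typing import Any, Callable, List, Sequence, Tuple

Predictor = Callable[[Any], Any]


def predict_with_fallback(predictors: Sequence[Tuple[str, Predictor]],
                          request: Any) -> Tuple[str, Any]:
    """Try each predictor in order; return (name, prediction) from the first that works."""
    errors: List[str] = []
    for name, predict in predictors:
        try:
            return name, predict(request)
        except Exception as exc:  # any model failure triggers the next fallback
            errors.append(f"{name}: {exc}")
    raise RuntimeError("All predictors failed: " + "; ".join(errors))


def broken_primary(request):
    # Stand-in for a model that is currently failing.
    raise TimeoutError("model server timed out")


def popularity_baseline(request):
    # Stand-in for a simple heuristic: always recommend the same items.
    return ["item-1", "item-2"]


chain = [("primary", broken_primary), ("baseline", popularity_baseline)]
print(predict_with_fallback(chain, {"user_id": 42}))
```

Returning the name of the predictor that answered makes it possible to track how often the system is running in degraded mode, which is itself a useful failure signal.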

Canary Deployments

Test in production safely:

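```python
import random

ERROR_THRESHOLD = 10  # hypothetical value; tune to your traffic volume


def canary_deployment(new_model, old_model, request_stream, traffic_percentage=0.01):
    """
    Gradually roll out new model

    traffic_percentage is a fraction (0.01 = 1% of requests).
    measure_performance and rollback are assumed to be defined elsewhere.
    """
    results = {
        'new_model_metrics': [],
        'old_model_metrics': [],
        'errors': []
    }

    for request in request_stream:
        if random.random() < traffic_percentage:
            # Route to new model
            try:
                prediction = new_model.predict(request)
                results['new_model_metrics'].append(
                    measure_performance(prediction, request)
                )
            except Exception as e:
                # Record the error and serve the request from the old model
                results['errors'].append(e)
                prediction = old_model.predict(request)
        else:
            prediction = old_model.predict(request)
            # Record baseline metrics so the two models can be compared
            results['old_model_metrics'].append(
                measure_performance(prediction, request)
            )

        if len(results['errors']) > ERROR_THRESHOLD:
            rollback()
            break

    return results
```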

Metrics for Learning Culture

Track how well your team learns from failures:

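```python
class LearningMetrics:
    def __init__(self, experiments_per_week, mean_time_to_recovery,
                 lessons_applied, lessons_documented,
                 repeated_failures, total_failures,
                 novel_approaches, total_experiments):
        self.experiment_velocity = experiments_per_week
        self.failure_recovery_time = mean_time_to_recovery
        # max(..., 1) guards against division by zero
        self.lesson_application_rate = lessons_applied / max(lessons_documented, 1)
        self.repeat_failure_rate = repeated_failures / max(total_failures, 1)
        self.innovation_index = novel_approaches / max(total_experiments, 1)

    def calculate_team_learning_rate(self):
        metrics = {
            'experiment_velocity': self.experiment_velocity,
            'failure_recovery_time': self.failure_recovery_time,
            'lesson_application_rate': self.lesson_application_rate,
            'repeat_failure_rate': self.repeat_failure_rate,
            'innovation_index': self.innovation_index
        }
        return metrics

    def learning_efficiency_score(self):
        """
        Higher score = better at learning from failures
        """
        score = (
            self.lesson_application_rate * 0.3 +
            (1 - self.repeat_failure_rate) * 0.3 +
            self.innovation_index * 0.2 +
            min(self.experiment_velocity / 10, 1) * 0.2
        )
        return score
```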
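A worked example makes the weighting easier to read. The standalone function below restates the score formula so it can be run on its own; the input values describe a hypothetical team and are not measurements.

```python
def learning_efficiency_score(lesson_application_rate: float,
                              repeat_failure_rate: float,
                              innovation_index: float,
                              experiment_velocity: float) -> float:
    """Weighted score using the same weights as the formula in this section."""
    return (
        lesson_application_rate * 0.3
        + (1 - repeat_failure_rate) * 0.3
        + innovation_index * 0.2
        + min(experiment_velocity / 10, 1) * 0.2
    )


# Hypothetical team: 60% of lessons applied, 20% repeat failures,
# 25% novel approaches, 5 experiments per week.
score = learning_efficiency_score(0.6, 0.2, 0.25, 5)
print(round(score, 2))  # 0.18 + 0.24 + 0.05 + 0.10 = 0.57
```

Because velocity is capped at 10 experiments per week, running more experiments beyond that point does not raise the score; only applying lessons, avoiding repeat failures, and trying novel approaches do.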

Related Practices

Learning from failures connects to broader development practices. XP 3.0 emphasizes TDD as guardrails for AI-generated code - catching failures early before they reach production. Strategic prioritization helps teams focus on high-value experiments, reducing wasteful failures while embracing productive ones.

The teams that ship successful AI products:

- Fail fast and cheap through rapid experimentation

- Document failures systematically to extract maximum learning

- Celebrate intelligent failures that prevent larger issues

- Build infrastructure that expects and handles failures gracefully

- Create a culture where failure is seen as data, not defeat

Every failed experiment narrows the search space for success. Every production issue teaches about real-world constraints. Every mistaken hypothesis refines understanding.