Prioritization in AI Product Development: The Art of Strategic No

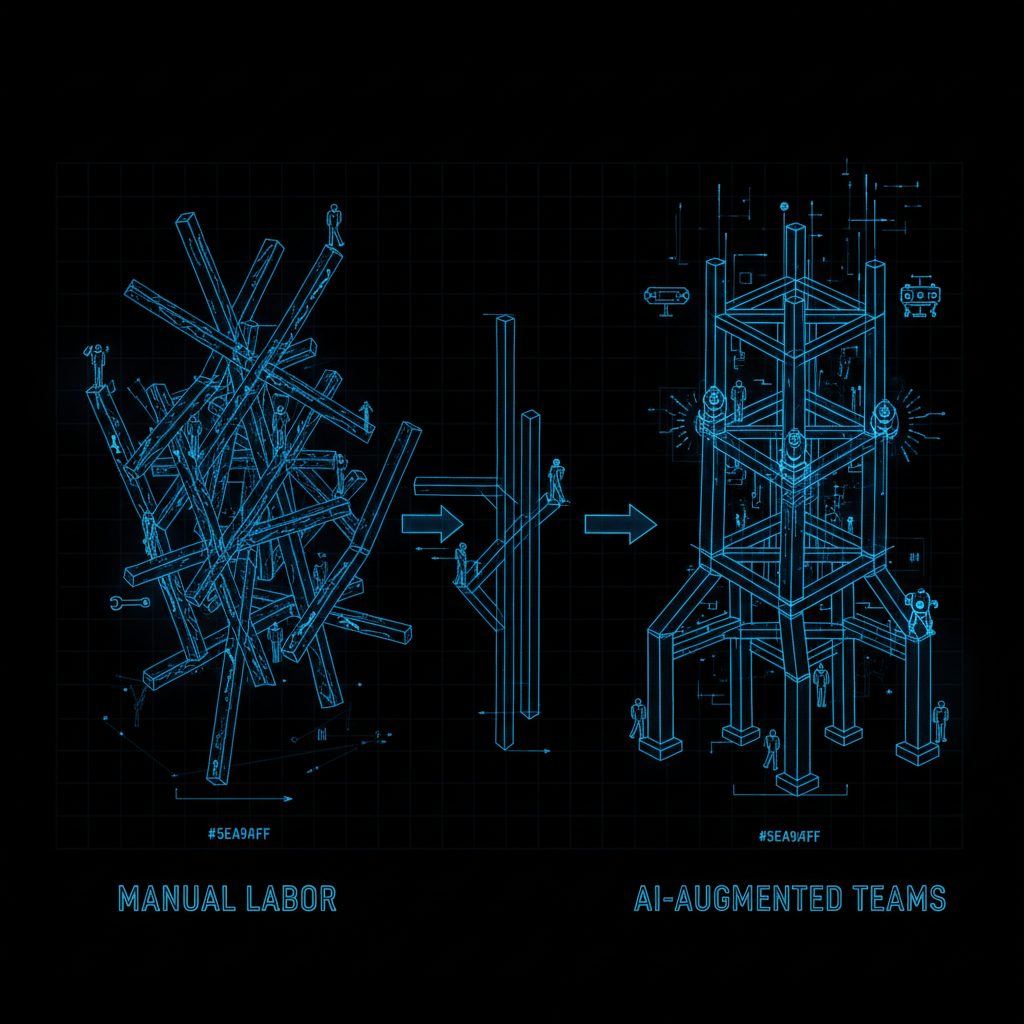

Building production AI systems requires intense focus. Every new feature, every experiment, every optimization competes for limited resources: engineer time, GPU hours, and cognitive bandwidth. The teams that ship successful products aren’t those that do everything; they’re those that master the discipline of not doing.

The Mathematics of Focus

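Consider a typical AI team’s potential workload, sketched as a toy model with illustrative numbers for capacity, effort, and context-switching cost: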

class ProjectLoad:
    """Toy model of how team capacity is diluted across concurrent projects."""

    def __init__(self):
        self.potential_projects = [
            "Implement transformer architecture",
            "Build real-time inference pipeline",
            "Create data labeling platform",
            "Optimize model for edge deployment",
            "Develop explainability dashboard",
            "Refactor feature engineering pipeline",
            "Implement A/B testing framework",
            "Build model monitoring system",
            "Create automated retraining pipeline",
            "Develop custom loss functions",
        ]

    def calculate_completion_rate(self, projects_attempted):
        if projects_attempted < 1:
            raise ValueError("projects_attempted must be at least 1")

        capacity = 100  # Team capacity units
        effort_per_project = 30  # Average effort units
        # Every project beyond the first costs 5 units of context switching
        context_switching_cost = 5 * (projects_attempted - 1)
        # Capacity cannot drop below zero, however many projects are attempted
        actual_capacity = max(0, capacity - context_switching_cost)
        completion_rate = min(1.0, actual_capacity / (projects_attempted * effort_per_project))

        return {
            'projects_attempted': projects_attempted,
            'completion_rate': completion_rate,
            'projects_completed': int(projects_attempted * completion_rate)
        }

# Results:
# 2 projects: 100% completion = 2 completed
# 5 projects: 53% completion = 2 completed
# 10 projects: 18% completion = 1 completed

Attempting everything means completing almost nothing of value: in this model, each added project taxes the capacity left for all the others.
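To see where the trade-off turns, the same toy model can be swept across every project count from 1 to 10. The sketch below is a standalone illustration, not part of the class above: the function names `projects_completed` and `sweep` are hypothetical, and the constants (100 capacity units, 30 effort units per project, 5 units of switching cost per extra project) are simply the illustrative values from the example. It uses integer division rather than multiplying by a floating-point completion rate, which gives the same whole-project count while avoiding rounding surprises at exact boundaries.

```python
def projects_completed(projects_attempted, capacity=100,
                       effort_per_project=30, switch_cost=5):
    """Whole projects finished under the toy model (all constants are assumptions)."""
    if projects_attempted < 1:
        return 0
    # Every project beyond the first costs switch_cost units of capacity.
    usable = max(0, capacity - switch_cost * (projects_attempted - 1))
    # A team cannot finish more projects than it attempts, nor more than
    # its remaining capacity can pay for in full.
    return min(projects_attempted, usable // effort_per_project)


def sweep(max_projects=10):
    """Map each number of attempted projects to the number completed."""
    return {n: projects_completed(n) for n in range(1, max_projects + 1)}


results = sweep()
# max() returns the first key with the highest completed count.
best = max(results, key=results.get)

for n, done in results.items():
    print(f"{n:>2} attempted -> {done} completed")
print(f"Best under these assumptions: attempt {best}")
```

With these particular numbers, output peaks at three concurrent projects and never recovers as more are added. The exact peak depends entirely on the assumed constants; the shape of the curve is the point.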